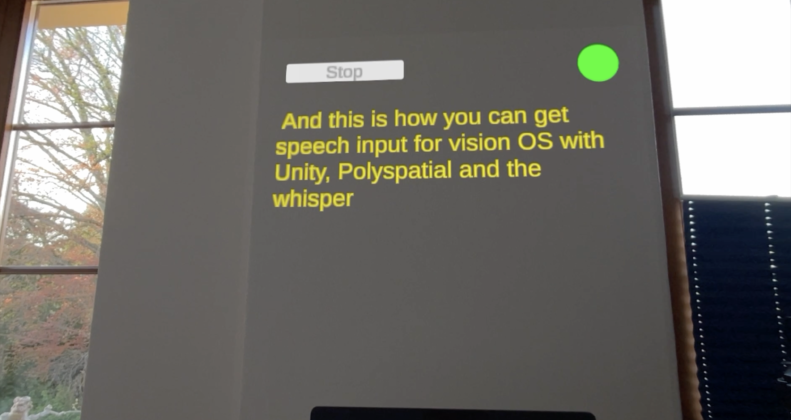

🎮 Hello XR Developers! Today’s tutorial is all about incorporating the visionOS system keyboard and AI-driven speech-to-text into your Unity UI projects. Let’s break down the process into manageable sections to get you started quickly!

- Setting Up Your Unity Scene
- Adjusting and Positioning UI Components
- Building and Launching on Device
- Interacting with UI Components
- Installing […]

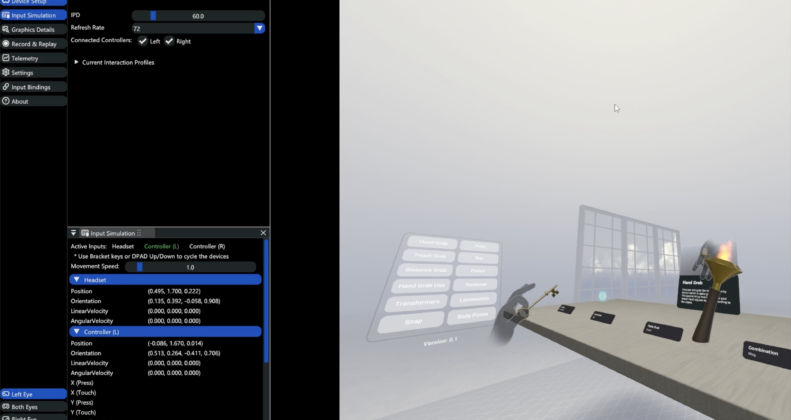

Hello XR Developers! 🌟 Today, we’re diving into a tool that is changing the way we test and develop XR applications: Meta’s XR Simulator. It integrates with Unity and lets developers simulate Meta Quest experiences directly from the Editor, without needing a physical headset. It’s a game-changer, especially for those developing on Windows machines. 🛠 […]

Hey XR Developers! In today’s tutorial, we’re diving into creating custom hand gestures using Unity’s XR Hands package and the new Hand Gesture Debugger. This feature opens up a world of immersive gameplay possibilities beyond the standard gestures like pinch, poke, grab, and point. Let’s explore how to craft unique hand interactions for your […]
