Hello XR Developers! Today, we’re diving into a feature you’ve all been eagerly anticipating: access to the Meta Quest Passthrough Cameras, now unlocked with Horizon OS v74. This new API lets you tap into the raw RGB camera feeds on Quest headsets—perfect for real-time environment sampling, AR overlays, and even object detection. In this guide, […]

Hello XR Developers! Today, we’re diving into a powerful new way to create colocated experiences using Shared Spatial Anchors and Colocation Discovery with Meta’s latest XR Core SDK. Starting with version 71, Meta has simplified the process significantly. In this tutorial, we’ll guide you step-by-step through the setup and explain how to use these tools […]

Hello XR Developers! Meta’s MR Utility Kit (MRUK) adds powerful utilities on top of the Scene API to simplify building spatially-aware apps. It provides tools for ray-casting against scene objects, finding spawn locations, placing objects on surfaces, and achieving dynamic lighting effects. In this quick overview of MRUK v71, we’ll explore exciting new features. If […]

Hello XR Developers! Today, we’re taking a deep dive into creating your very own drawing app using the Logitech MX-Ink stylus with Meta Quest. If you’re familiar with apps like ShapesXR or PaintingVR, you’ll love this new way of sketching and painting inside your headset. In this tutorial, we’ll walk through how to set up […]

Hello XR Developers! In today’s tutorial, we’re diving into how to integrate Meta’s Voice SDK with Large Language Models (LLMs) like Meta’s Llama, using Amazon Bedrock. This setup will allow you to send voice commands, process them through LLMs, and receive spoken responses, creating natural conversations with NPCs or assistants in your Unity game. Let’s […]

🎮 Hello XR Developers! Today’s tutorial is all about using Meta’s Voice SDK to define and understand intents, create a wake word, and capture the transcript of what is said after the system hears the wake word. Let’s break down the process into manageable sections to get you started quickly! Setting Up wit.ai […]

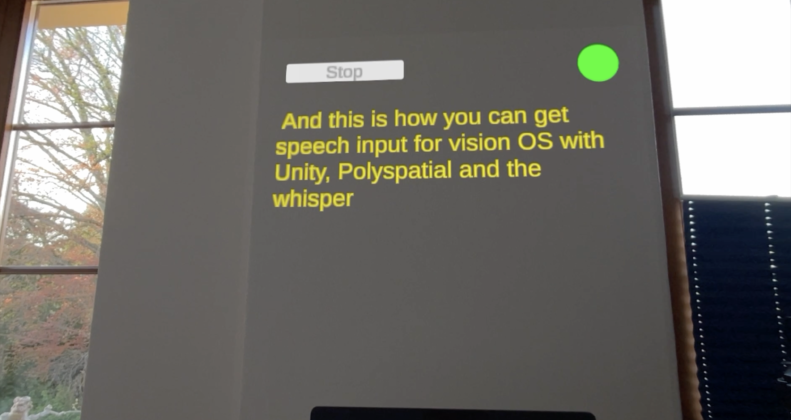

🎮 Hello XR Developers! Today’s tutorial is all about incorporating the visionOS system keyboard and AI-driven speech-to-text into your Unity UI projects. Let’s break down the process into manageable sections to get you started quickly! Setting Up Your Unity Scene Adjusting and Positioning UI Components Building and Launching on Device Interacting with UI Components Installing […]

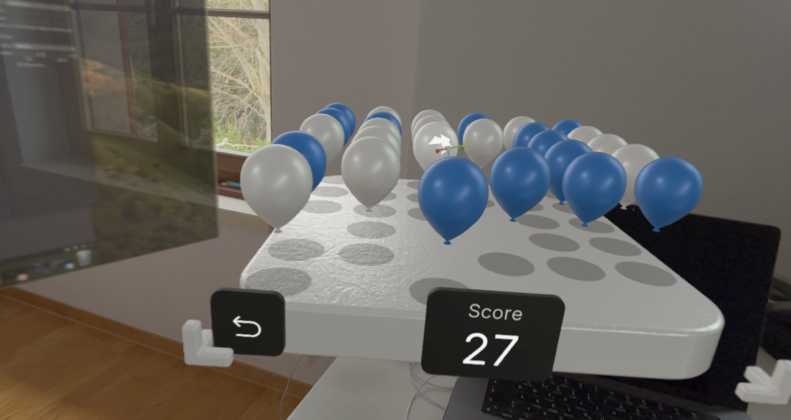

Introduction 🌟 XR Developers, get ready to dive into 3D touch input for visionOS devices using Unity! This comprehensive guide will walk you through setting up your project, testing scenes on your device, and implementing sophisticated input actions to bring your Unity applications to life. Whether you’re looking to pop balloons in a gallery or […]

Hello XR Developers! Today, we’re diving into the latest PolySpatial update for Vision Pro, focusing on integrating SwiftUI windows for interactive Unity scenes. This breakthrough allows us to blend native SwiftUI elements with Unity, offering seamless control over mixed reality applications. 🌟 Setting the Stage To kick things off, ensure your toolkit is up to […]
